Command format

`MIN HOUR DOM MON DOW CMD`

View

`crontab -l`

Edit

`crontab -e`

Run at startup

`@reboot /home/ubuntu/app/consul/start.sh`

Time format

`MIN HOUR DOM MON DOW CMD`
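To make the six-field layout concrete, here is a minimal Python sketch that splits one crontab entry into its five time fields and the command. The function name `split_cron_line` and the sample entry are hypothetical, and special strings such as `@reboot` are not handled.

```python
FIELD_NAMES = ("minute", "hour", "day_of_month", "month", "day_of_week")

def split_cron_line(line):
    """Split 'MIN HOUR DOM MON DOW CMD' into a dict of named fields."""
    # Split on whitespace at most 5 times so the command keeps its own spaces.
    parts = line.split(None, 5)
    if len(parts) != 6:
        raise ValueError("expected 5 time fields followed by a command")
    fields = dict(zip(FIELD_NAMES, parts[:5]))
    fields["command"] = parts[5]
    return fields

# Hypothetical entry: run a backup script at 02:30 every Monday.
entry = split_cron_line("30 2 * * 1 /home/ubuntu/backup.sh --full")
print(entry["minute"], entry["hour"], entry["command"])
```

Limiting the split to five separators keeps any arguments in the command intact.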

Set PATH

`crontab -e # add the following at the top`

`pip3 install wheel setuptools`

setup.py

`from setuptools import setup`

setup.py example

`import os.path`

`python setup.py bdist_wheel --universal`

`import urllib.request`

`q = urllib.request.Request(url=url, method='GET')`
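The excerpt above shows only the `Request` construction. A minimal sketch of how it might be completed follows. The URL and the helper name `fetch` are hypothetical, and the network call is wrapped in a function so that nothing is sent until it is called.

```python
import urllib.request

# Hypothetical URL, used only for illustration.
url = "http://example.com/"

# Building the Request object does not touch the network.
req = urllib.request.Request(url=url, method="GET")
print(req.get_method(), req.full_url)

def fetch(request, timeout=10):
    """Send the request and return the response body as bytes."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.read()
```

Calling `fetch(req)` would perform the actual GET; `urlopen` raises `urllib.error.URLError` if the host cannot be reached.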

`export PATH=/opt/scylladb/bin:$PATH`
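When the tools are launched from a Python script rather than a shell, the same effect can be sketched by prepending the directory to `PATH` in a copy of the environment. The helper name `env_with_prepended_path` is hypothetical, and the base environment passed in below is a stand-in for illustration.

```python
import os

def env_with_prepended_path(directory, base_env=None):
    """Return a copy of the environment with `directory` first on PATH."""
    env = dict(os.environ if base_env is None else base_env)
    current = env.get("PATH", "")
    # Avoid a trailing separator when PATH was empty or unset.
    env["PATH"] = (directory + os.pathsep + current) if current else directory
    return env

# Stand-in base environment; omit the second argument to use os.environ.
env = env_with_prepended_path("/opt/scylladb/bin", {"PATH": "/usr/bin"})
print(env["PATH"])
```

The resulting dict can be passed as `env=` to `subprocess.run` so that only the child process sees the modified `PATH`.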

`warning`

-Wall

`-Waddress -Warray-bounds (only with -O2) -Wc++0x-compat -Wchar-subscripts`

-Wextra

`-Wclobbered -Wempty-body -Wignored-qualifiers -Wmissing-field-initializers`

`-Wall -Wextra -Waggregate-return -Wcast-align -Wcast-qual -Wdisabled-optimization -Wdiv-by-zero -Wendif-labels -Wformat-extra-args -Wformat-nonliteral -Wformat-security -Wformat-y2k -Wimplicit -Wimport -Winit-self -Winline -Winvalid-pch -Wjump-misses-init -Wlogical-op -Werror=missing-braces -Wmissing-declarations -Wno-missing-format-attribute -Wmissing-include-dirs -Wmultichar -Wpacked -Wpointer-arith -Wreturn-type -Wsequence-point -Wsign-compare -Wstrict-aliasing -Wstrict-aliasing=2 -Wswitch -Wswitch-default -Werror=undef -Wno-unused -Wvariadic-macros -Wwrite-strings -Wc++-compat -Werror=declaration-after-statement -Werror=implicit-function-declaration -Wmissing-prototypes -Werror=nested-externs -Werror=old-style-definition -Werror=strict-prototypes`

`pid=$(pgrep ranker_service)`

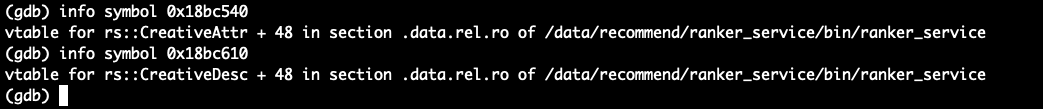

dump

`gdb bin/ranker_service core.9085`

Count vtable occurrences

`hexdump result.bin | awk '{printf "%s%s%s%s\n%s%s%s%s\n", $5,$4,$3,$2,$9,$8,$7,$6}' | sort | uniq -c | sort -nr > hex.t`
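The pipeline above appears to reassemble 64-bit little-endian words from `hexdump`'s 16-bit columns and then count how often each value occurs. A rough Python equivalent is sketched below, assuming `result.bin` is a raw memory dump from a little-endian 64-bit process. The function names `count_qwords` and `top_words` and the sample values are hypothetical.

```python
import struct
from collections import Counter

def count_qwords(data):
    """Count each little-endian 64-bit word in a bytes object."""
    usable = len(data) - len(data) % 8  # ignore a trailing partial word
    return Counter(value for (value,) in struct.iter_unpack("<Q", data[:usable]))

def top_words(path, limit=20):
    """Return the most frequent words in a dump file as (hex, count) pairs."""
    with open(path, "rb") as fh:
        counts = count_qwords(fh.read())
    return [(f"{value:016x}", n) for value, n in counts.most_common(limit)]

# Made-up sample: the same pointer-like value twice, plus one other word.
sample = struct.pack("<QQQ", 0x400A10, 0x400A10, 0x1)
print(count_qwords(sample).most_common(1))
```

Unlike plain `hexdump`, which collapses runs of identical lines into a `*`, this reads every word, so the counts for heavily repeated values may differ from the shell pipeline.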

Self-signed certificate

Or

`openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/ssl/private/nginx-selfsigned.key -out /etc/ssl/certs/nginx-selfsigned.crt`

Place the generated certificate files in the /certs directory.

`sudo mkdir /certs`

Generate the dhparam.pem file.

`openssl dhparam -out /certs/dhparam.pem 4096`

Create /etc/nginx/snippets/self-signed.conf with the following content.

`ssl_certificate /certs/nginx-selfsigned.crt;`

Set up the nginx site configuration.

`server {`

Configure as needed.

`server {`

`yum install gperftools gperftools-devel -y`

`dump`

`sudo kubeadm reset phase cleanup-node # can also be run on the master node`

View node labels

Set the node label selector (nodeSelector) for a pod

`apiVersion: v1`
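Since the manifest above is cut off after its first line, here is a minimal sketch of what a pod spec with a `nodeSelector` might look like, built as a Python dict and printed as JSON (which `kubectl apply -f` accepts alongside YAML). The pod name, image, and the `disktype: ssd` label are assumptions for illustration, not values from the original.

```python
import json

def pod_with_node_selector(name, image, labels):
    """Build a Pod manifest that is scheduled only onto nodes matching `labels`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{"name": name, "image": image}],
            # Every key/value pair here must be present on the target node.
            "nodeSelector": dict(labels),
        },
    }

# Hypothetical values: an nginx pod pinned to nodes labeled disktype=ssd.
manifest = pod_with_node_selector("nginx", "nginx", {"disktype": "ssd"})
print(json.dumps(manifest, indent=2))
```

For a Deployment, the same `nodeSelector` mapping goes under `spec.template.spec` rather than directly under `spec`.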

Set the node label selector for a deployment

`spec:`

`kubectl delete --all deployments --namespace=<ns>`

View pod information

List all services

`microk8s kubectl create token -n kube-system default --duration=8544h`

`docker login`

Create

List all namespaces

`kubectl exec --stdin --tty <pod-instance> -- /bin/bash`

float [0, 1]

Draw subplots.

Prototype

`subplot(nrows, ncols, index, **kwargs)`
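In `subplot(nrows, ncols, index)`, `index` starts at 1 in the upper-left cell and increases to the right, row by row. The sketch below shows that numbering in plain Python without importing Matplotlib. The helper name `subplot_position` is hypothetical and not part of the Matplotlib API.

```python
def subplot_position(nrows, ncols, index):
    """Return the zero-based (row, col) of a 1-based subplot index."""
    if not 1 <= index <= nrows * ncols:
        raise ValueError("index must be between 1 and nrows * ncols")
    # Row-major order: fill one row left to right, then move down.
    return divmod(index - 1, ncols)

# Walk through every cell of a 2 x 3 grid.
for i in range(1, 7):
    print(i, subplot_position(2, 3, i))
```

For a 2 x 3 grid, index 4 is therefore the first cell of the second row.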

Example

`import matplotlib.pyplot as plt`

`# Data from the Iris dataset; draw a scatter plot`

Matplotlib

`sudo pip3 install matplotlib`

`import numpy as np`

Output

`#!/bin/python`

`from cycler import cycler`

`#### way 1`