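- Yu Qiuyu (余秋雨)

- 《文化苦旅》 (A Bitter Journey Through Culture)

- 《吾家小史》 (A Short History of My Family)

- 《何谓文化》 (What Is Culture)

- 《极端之美》 (The Beauty of Extremes)

- 《千年一叹》 (A Sigh of a Thousand Years)

- 《山河之书》 (The Book of Mountains and Rivers)

- 《行者无疆》 (A Traveler Without Borders)

- 《中国文脉》 (The Chinese Cultural Lineage)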

curl

Options

`-X`: specify the request method

Examples

Format
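A minimal sketch of typical `-X` usage, wrapped in a hypothetical helper `curl_json`; the URL and JSON body are placeholders. Setting `DRY_RUN=1` prints the curl command instead of sending it.

```shell
# curl_json METHOD URL [JSON]: hypothetical helper, not part of curl.
# -X sets the request method, -d sends a request body,
# -sS hides the progress meter but still prints errors.
curl_json() {
  local method=$1 url=$2 body=${3:-}
  if [ -n "$body" ]; then
    ${DRY_RUN:+echo} curl -sS -X "$method" \
      -H 'Content-Type: application/json' -d "$body" "$url"
  else
    ${DRY_RUN:+echo} curl -sS -X "$method" "$url"
  fi
}
```

For example `curl_json DELETE http://localhost:8080/api/items/1`. With `-d`, curl already defaults to POST, so `-X` mostly matters for methods such as PUT or DELETE.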

etcd

Data model

etcd is designed to reliably store data that is updated infrequently, and to serve reliable watch queries on that data.
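A sketch of that model with `etcdctl` (v3 API, the default since etcd 3.4): values are written with `put`, read with `get`, and clients `watch` a key or prefix to be told about changes. The helper names and the `/config/` prefix are hypothetical; `DRY_RUN=1` only prints the commands.

```shell
# Hypothetical helpers around etcdctl (v3 API).
cfg_put()   { ${DRY_RUN:+echo} etcdctl put "$1" "$2"; }
# --prefix matches every key starting with the given string
cfg_get()   { ${DRY_RUN:+echo} etcdctl get --prefix "$1"; }
# blocks and prints each change under the prefix as it happens
cfg_watch() { ${DRY_RUN:+echo} etcdctl watch --prefix "$1"; }
```

Run `cfg_watch /config/` in one terminal and `cfg_put /config/db/host 10.0.0.5` in another to see the watch fire.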

nginx log analysis

Merging log files

For gzip-compressed (`.gz`) log files, use `gzcat` (`zcat` on Linux).
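A portable sketch of the merge: `gzip -dcf` decompresses `.gz` input and, because of `-f`, copies plain files through unchanged, so one command covers a mix of rotated and current logs. `merge_logs` and the file names are hypothetical.

```shell
# merge_logs FILE...: hypothetical helper.
# -d decompress, -c write to stdout, -f pass non-gzip input through as-is.
merge_logs() {
  gzip -dcf -- "$@"
}
```

For example `merge_logs access.log.2.gz access.log.1 access.log > merged.log`, listing the oldest file first.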

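Analysis

goaccess

Installation

Install with Homebrew, or download it.

`brew install goaccess`

Matching the log format

The nginx `log_format` has to be translated into a format goaccess can parse. Examples: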

| nginx `log_format` | goaccess format configuration |
|---|---|
| `$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" "$http_x_forwarded_for" "$request_body" "$upstream_addr" $upstream_status $upstream_response_time $request_time` | `log-format %h %^ [%d:%t %^] "%r" %s %b "%R" "%u" "%^" "%^" "%^" %^ %^ %T`<br>`date-format %d/%b/%Y`<br>`time-format %T` |
| `$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" $request_time $upstream_addr $upstream_response_time $pipe` | `%h %^[%d:%t %^] "%r" %s %b "%R" "%u" %T %^` |

Configuring the log format

Before analyzing, configure the log format, either in the `~/.goaccessrc` file or on the command line.

`~/.goaccessrc`
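For the first nginx format in the table, `~/.goaccessrc` would hold the three directives below. This sketch prints the file so it can be reviewed before writing it; the helper name is hypothetical.

```shell
# goaccessrc_sample: hypothetical helper printing a ~/.goaccessrc for the
# first nginx log_format in the table.
# Usage: goaccessrc_sample > ~/.goaccessrc
goaccessrc_sample() {
cat <<'EOF'
log-format %h %^ [%d:%t %^] "%r" %s %b "%R" "%u" "%^" "%^" "%^" %^ %^ %T
date-format %d/%b/%Y
time-format %T
EOF
}
```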

Offline analysis

Using the log format configured in `~/.goaccessrc`.
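A sketch of an offline run that writes a static HTML report. Per its man page goaccess already looks for `~/.goaccessrc`; `-p` just names the config file explicitly. The wrapper name and file names are placeholders, and `DRY_RUN=1` only prints the command.

```shell
# goaccess_report LOGFILE [OUTPUT]: hypothetical wrapper.
# -p: config file holding log-format/date-format/time-format
# -o: write a static HTML report instead of opening the terminal UI
goaccess_report() {
  ${DRY_RUN:+echo} goaccess "$1" -p "$HOME/.goaccessrc" -o "${2:-report.html}"
}
```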

If this fails, try removing `LANG="en_US.UTF-8"`.

spring boot maven

Single executable jar

`<plugin>`

assemble.xml

`<assembly xmlns="http://maven.apache.org/ASSEMBLY/2.1.0"`

openstack network

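Networks

Network types are set up mainly following two guides: configuring the provider network on the hardware interface, and configuring self-service virtual networks.

Types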

- local

- flat

- vlan

- vxlan

- gre

- geneve

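Connecting to the external network & assigning external IPs

OpenStack is configured in self-service network mode. "External network" here means the network outside the virtual networks, i.e. the router's LAN 192.168.6.0/24 (router at 192.168.6.1).

Configuration

Host network interfaces

Ubuntu 20.04 is used as the example; the host has two NICs (enp4s0, enp5s0).

`/etc/netplan/99-config.yaml`

This gives enp5s0 a static IP, and turns off DHCP on enp4s0 without assigning it any IP.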
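A sketch of such a netplan file, printed by a hypothetical helper so it can be reviewed before it is written. The address 192.168.6.10 is a placeholder, and using 192.168.6.1 as gateway and DNS assumes the LAN router described above. `gateway4` is what Ubuntu 20.04's netplan understands; newer releases deprecate it in favour of `routes`.

```shell
# netplan_sample: hypothetical helper.
# enp4s0: DHCP off and no address (left for Neutron's provider network)
# enp5s0: static address for the host itself
netplan_sample() {
cat <<'EOF'
network:
  version: 2
  renderer: networkd
  ethernets:
    enp4s0:
      dhcp4: false
    enp5s0:
      dhcp4: false
      addresses: [192.168.6.10/24]
      gateway4: 192.168.6.1
      nameservers:
        addresses: [192.168.6.1]
EOF
}
```

Then `netplan_sample | sudo tee /etc/netplan/99-config.yaml > /dev/null && sudo netplan try`; `netplan try` rolls back automatically if the change is not confirmed.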

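For Neutron, the configuration to look at is under `/etc/neutron/plugins/ml2`.

`cat ml2_conf.ini | grep -v '#' | grep '\S'` (show only non-comment, non-blank lines)

In `linuxbridge_agent.ini`, in `physical_interface_mappings = provider:enp4s0` the part before the colon (`provider`) is the newly defined physical network name, and `enp4s0` is the hardware interface as the system names it.

In `ml2_conf.ini`, set `flat_networks = provider` to declare which physical network flat-type networks may use.

Multiple values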

physical_interface_mappings = provider:enp4s0,providervpn:enp5s0

flat_networks = provider,providervpn
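Put together, the multi-value settings go into these sections (section names as in the Neutron Linux bridge install guide; the `providervpn:enp5s0` pair is from this note). A sketch that prints the snippets for reference; the helper name is hypothetical.

```shell
# neutron_flat_snippets: hypothetical helper printing the two settings
# with the INI sections they belong to.
neutron_flat_snippets() {
cat <<'EOF'
# /etc/neutron/plugins/ml2/linuxbridge_agent.ini
[linux_bridge]
physical_interface_mappings = provider:enp4s0,providervpn:enp5s0

# /etc/neutron/plugins/ml2/ml2_conf.ini
[ml2_type_flat]
flat_networks = provider,providervpn
EOF
}
```

After editing both files, restart the services, e.g. `sudo systemctl restart neutron-server neutron-linuxbridge-agent` on Ubuntu.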

sysctl.conf

Edit `/etc/sysctl.conf` and set `net.ipv4.ip_forward=1`.
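A sketch of making that edit idempotent, so re-running a setup script does not duplicate the line. `ensure_line` is a hypothetical helper.

```shell
# ensure_line FILE LINE: hypothetical helper.
# Appends LINE to FILE only if an identical line is not already present
# (-x whole-line match, -F fixed string, no regex).
ensure_line() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}
```

As root: `ensure_line /etc/sysctl.conf net.ipv4.ip_forward=1 && sysctl -p`, then `sysctl net.ipv4.ip_forward` should report `1`.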

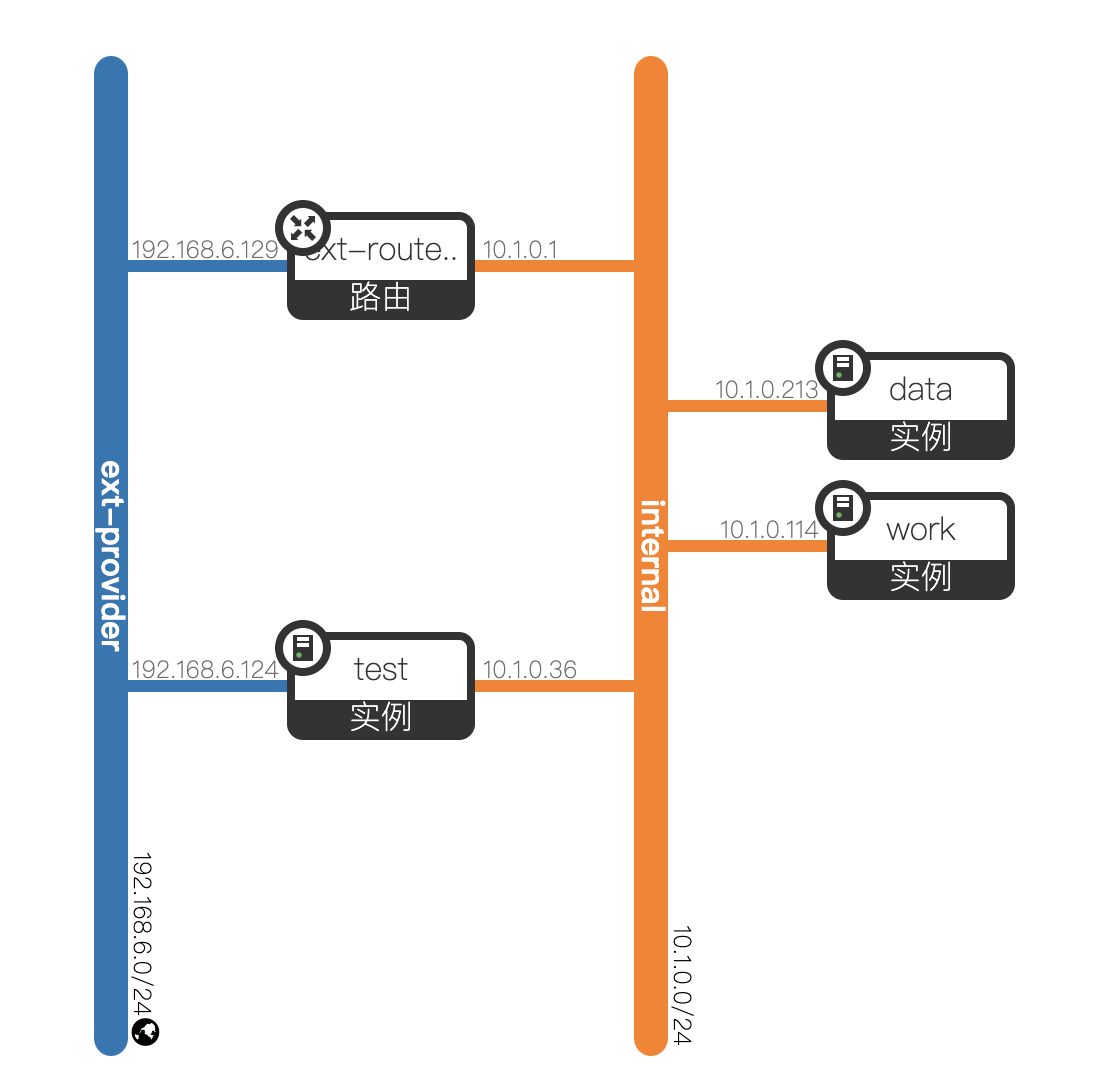

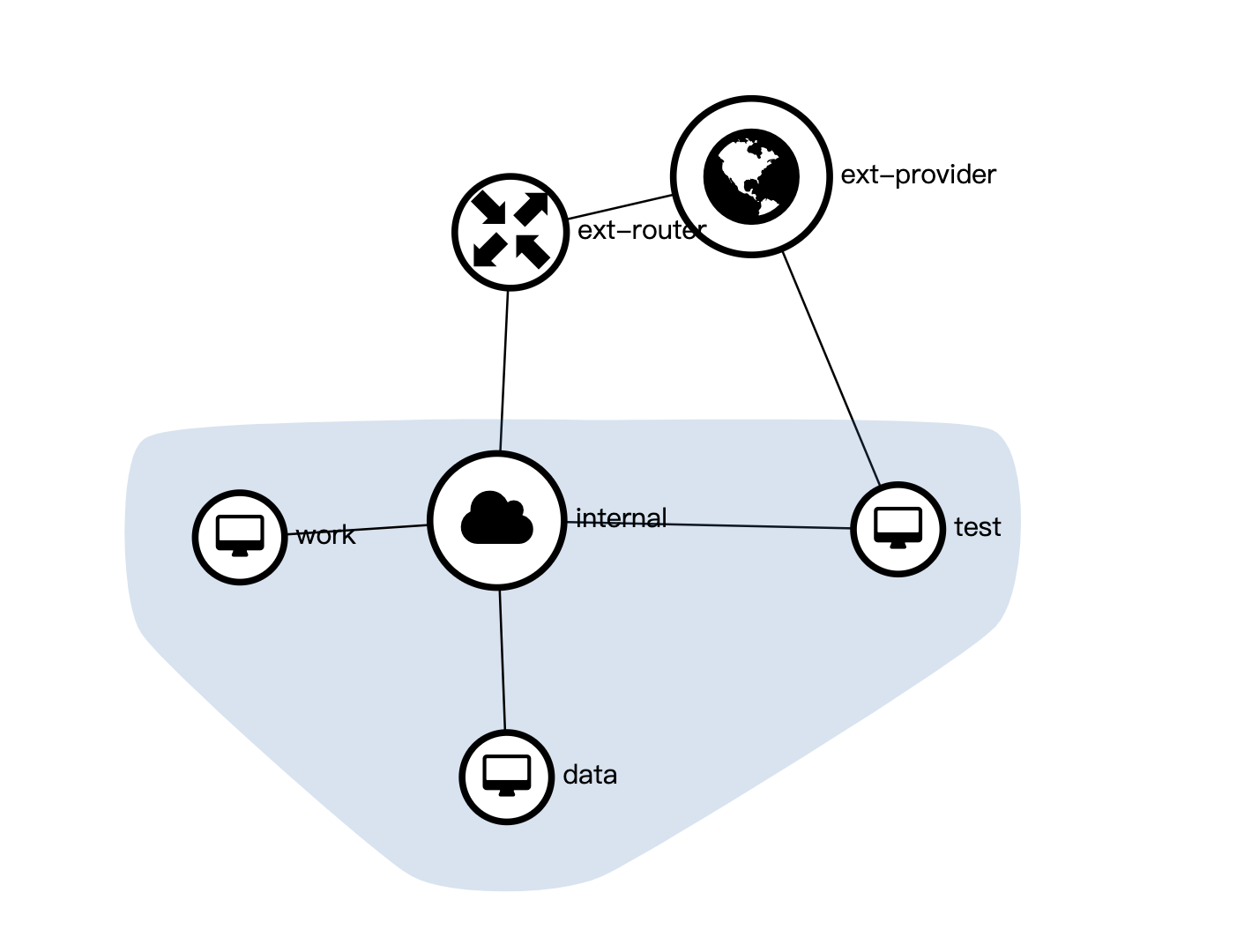

Network topology

Notes

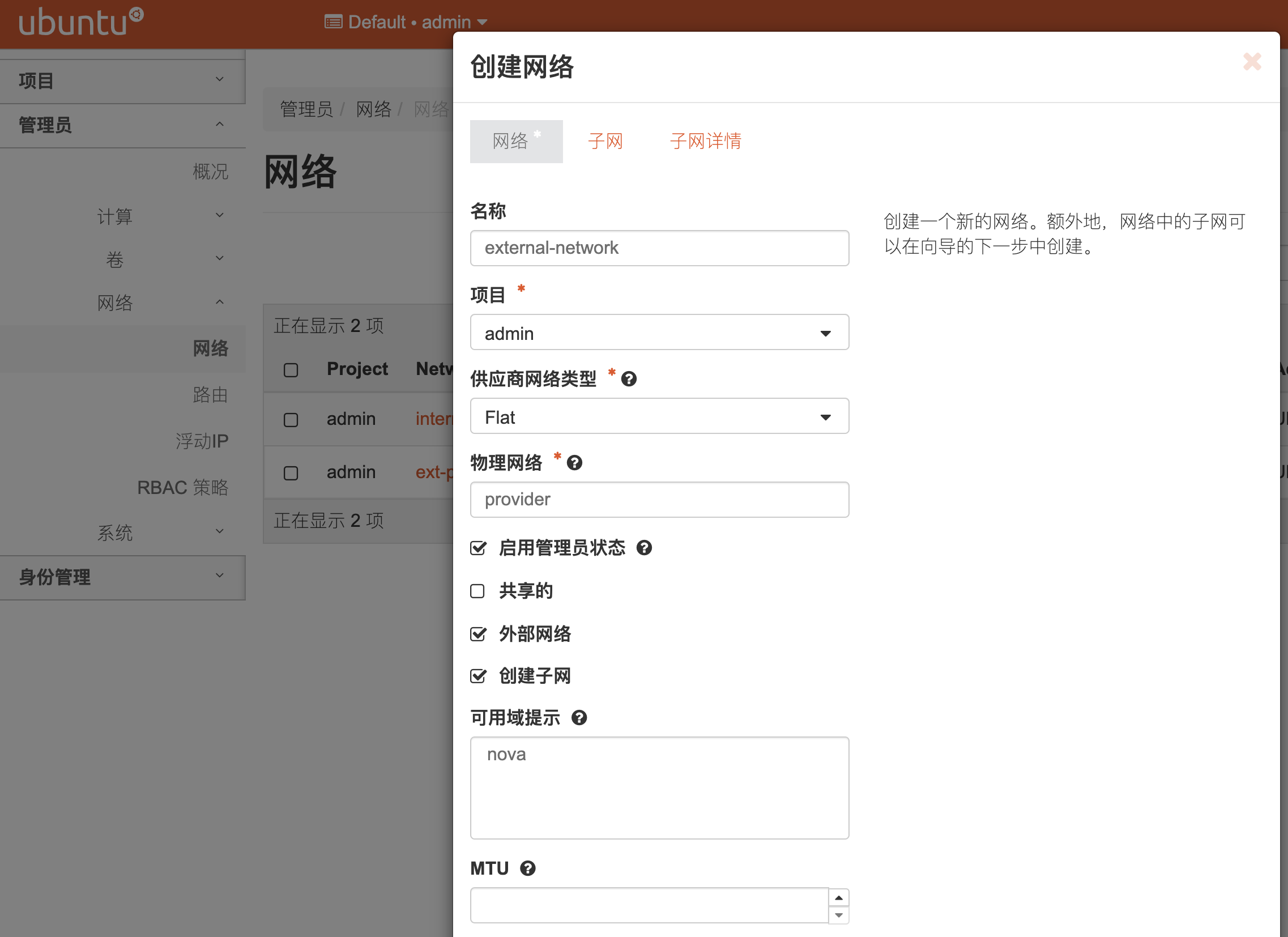

- Create the external network with type FLAT and set the physical network to `provider` (matching `linuxbridge_agent.ini`)

- Create internal networks with type VXLAN

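Connecting to the external network

First, create the external network as admin.

There are two ways to reach the external network: attach the instance directly to the new external network, or create an internal network and connect it to the external network through a router.

In the topology diagram, the test host is attached directly to the external network, so it gets a 192.168.6.* address and can reach the outside; data and work are attached to the internal network (10.1.0.0/24), which reaches the external network through a router.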
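A sketch of building this topology with the openstack CLI, run with admin credentials loaded (e.g. an `admin-openrc` file as in the install guide). The names `ext-net`, `ext-subnet`, `int-net`, `int-subnet`, `router1` and the allocation pool are hypothetical; `provider` must match `physical_interface_mappings`. `DRY_RUN=1` only prints the commands.

```shell
# ostk: run openstack, or just print the command when DRY_RUN is set.
ostk() { ${DRY_RUN:+echo} openstack "$@"; }

create_topology() {
  # External network: flat type on the "provider" physical network;
  # --share lets other projects attach instances directly (the test host case).
  ostk network create --external --share \
    --provider-network-type flat --provider-physical-network provider ext-net
  # Keep the pool outside the range the LAN router hands out itself.
  ostk subnet create --network ext-net --subnet-range 192.168.6.0/24 \
    --gateway 192.168.6.1 \
    --allocation-pool start=192.168.6.100,end=192.168.6.200 ext-subnet
  # Internal self-service network (VXLAN) for data and work
  ostk network create --provider-network-type vxlan int-net
  ostk subnet create --network int-net --subnet-range 10.1.0.0/24 int-subnet
  # Router: gateway on the external network, interface on the internal subnet
  ostk router create router1
  ostk router set --external-gateway ext-net router1
  ostk router add subnet router1 int-subnet
}
```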

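Assigning external IPs

An external address can be assigned in two ways: a direct attachment to the external network, or a floating IP.

A direct attachment adds an interface on the external network to the instance, so the assigned external IP shows up in the instance's own network configuration. A floating IP is instead bound to the instance's internal IP: the floating IP is not visible inside the instance, but the instance is reachable from outside through it.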
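A sketch of the floating IP route with the openstack CLI: allocate an address from the external network, then bind it to the instance. `bind_floating_ip` is hypothetical and `ext-net` is a placeholder for whatever the external network is called; with `DRY_RUN=1` both commands are only printed.

```shell
# bind_floating_ip SERVER [EXTERNAL_NET]: hypothetical helper.
bind_floating_ip() {
  local server=$1 net=${2:-ext-net} fip
  if [ -n "${DRY_RUN:-}" ]; then
    echo openstack floating ip create "$net" -f value -c floating_ip_address
    fip=FLOATING_IP   # placeholder so a dry run needs no cloud
  else
    # -f value -c ... prints just the allocated address
    fip=$(openstack floating ip create "$net" -f value -c floating_ip_address) || return
  fi
  ${DRY_RUN:+echo} openstack server add floating ip "$server" "$fip"
}
```

For example `bind_floating_ip data`; inside the instance, `ip addr` still shows only the 10.1.0.0/24 address.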

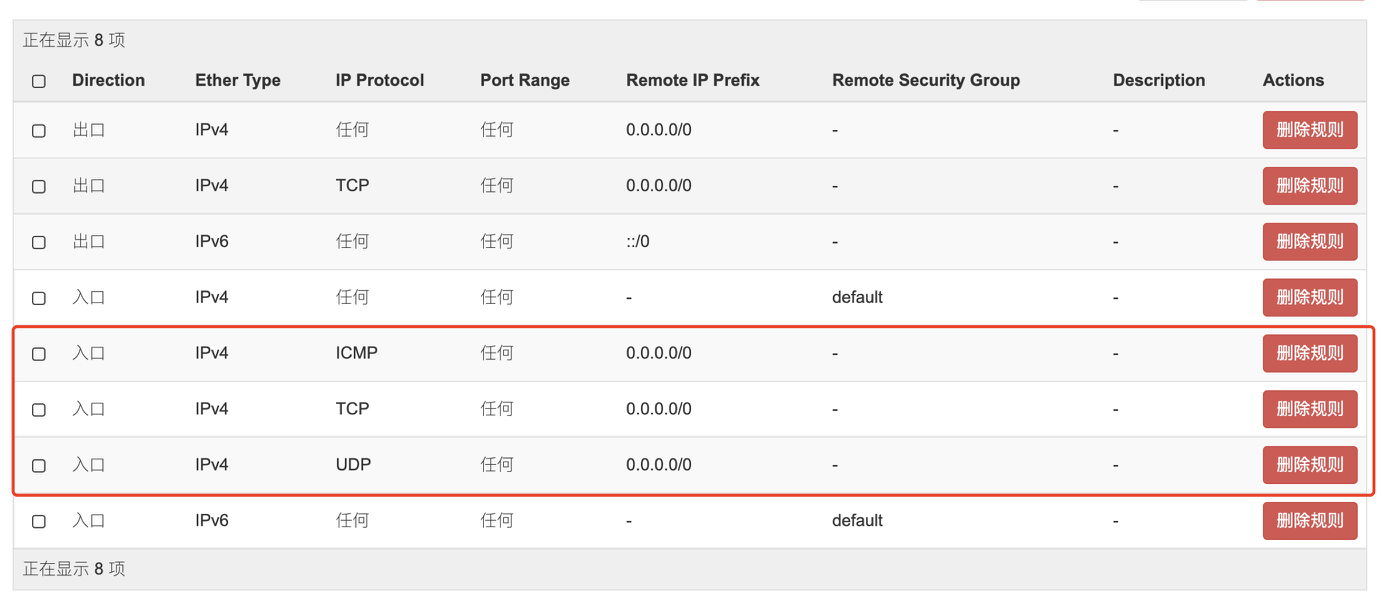

Final step

OpenStack's default security group blocks incoming requests, so ingress rules have to be added.
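A sketch of adding ingress rules to the project's `default` security group, allowing ping (ICMP) and SSH (TCP 22); other ports are opened the same way. `open_default_sg` is hypothetical and `DRY_RUN=1` only prints the commands.

```shell
# open_default_sg [GROUP]: hypothetical helper, GROUP defaults to "default".
open_default_sg() {
  local sg=${1:-default}
  ${DRY_RUN:+echo} openstack security group rule create --ingress --protocol icmp "$sg"
  ${DRY_RUN:+echo} openstack security group rule create --ingress --protocol tcp --dst-port 22 "$sg"
}
```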

distro mirrors

ubuntu

22.04

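Tsinghua (TUNA) mirror

`deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy main restricted universe multiverse`

20.04

Tsinghua (TUNA) mirror

Source (`deb-src`) mirrors are commented out by default to speed up `apt update`; uncomment them if needed.

18.04

Tsinghua (TUNA) mirror

Source (`deb-src`) mirrors are commented out by default to speed up `apt update`; uncomment them if needed.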
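Since the three versions differ only in the codename (jammy = 22.04, focal = 20.04, bionic = 18.04), a sketch that prints a `sources.list` for any of them, with the `-updates`, `-backports` and `-security` suites and the `deb-src` lines commented out as described. `tuna_sources` is a hypothetical helper.

```shell
# tuna_sources CODENAME: hypothetical helper printing a sources.list
# that points every suite at the TUNA mirror.
tuna_sources() {
  local codename=$1 mirror=http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ suite
  for suite in "$codename" "$codename-updates" "$codename-backports" "$codename-security"; do
    printf 'deb %s %s main restricted universe multiverse\n' "$mirror" "$suite"
    printf '# deb-src %s %s main restricted universe multiverse\n' "$mirror" "$suite"
  done
}
```

After backing up the old file: `tuna_sources focal | sudo tee /etc/apt/sources.list > /dev/null && sudo apt update`.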

Installing Arch Linux

Create and mount partitions

- List the disks

`fdisk -l`

- Create the partition table with fdisk

`fdisk /dev/sda`

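2.1 Create partitions

Create the /boot partition

`(fdisk) n  # create a partition`

Create the / partition

`(fdisk) n  # create a partition`

Verify

`(fdisk) p  # print the table again; the two new partitions are listed`

Save

`(fdisk) w  # write the table to disk and exit`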

- Format the partitions

`mkfs.fat -F32 /dev/sda1  # format the /boot partition (assumed to be /dev/sda1)`

- Mount

`mount /dev/sda2 /mnt  # mount the root partition`
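The interactive fdisk steps can also be scripted with `sfdisk`. A sketch assuming a UEFI machine and a GPT label, with a 512 MiB EFI system partition for /boot and the rest of the disk for /; the size, the GPT choice and the helper name are assumptions.

```shell
# partition_script: hypothetical helper printing an sfdisk script.
# Each line after the header is start,size,type; an empty field means default.
# U = EFI system partition, L = Linux filesystem.
partition_script() {
  printf '%s\n' 'label: gpt' ',512MiB,U' ',,L'
}
```

`partition_script | sfdisk /dev/sda` replaces the disk's partition table, so check the target with `fdisk -l` first.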

Install

`pacstrap -K /mnt base linux linux-firmware`

Configure

fstab
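The usual fstab step in the Arch installation guide is to generate the file from the current mounts with `genfstab`. A sketch assuming the new system is mounted at `/mnt`; `write_fstab` is a hypothetical wrapper.

```shell
# write_fstab [ROOT]: hypothetical wrapper, ROOT defaults to /mnt.
# genfstab -U identifies filesystems by UUID rather than /dev/sdX names.
write_fstab() {
  local root=${1:-/mnt}
  genfstab -U "$root" >> "$root/etc/fstab"
}
```

Run `write_fstab /mnt`, check `/mnt/etc/fstab`, then `arch-chroot /mnt` for the remaining configuration.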

Boot loader

GRUB
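A sketch of the GRUB setup from inside `arch-chroot`, assuming a UEFI machine with the EFI system partition mounted at `/boot` (as formatted above with `mkfs.fat`). The wrapper name is hypothetical; `DRY_RUN=1` only prints the commands.

```shell
# install_grub_uefi: hypothetical wrapper, run inside arch-chroot.
install_grub_uefi() {
  ${DRY_RUN:+echo} pacman -S --needed --noconfirm grub efibootmgr
  # installs the EFI binary into /boot and registers a "GRUB" boot entry
  ${DRY_RUN:+echo} grub-install --target=x86_64-efi --efi-directory=/boot --bootloader-id=GRUB
  # generates the menu from the installed kernels
  ${DRY_RUN:+echo} grub-mkconfig -o /boot/grub/grub.cfg
}
```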

Reboot

`exit`

Arch Linux initial setup

Chinese locale

Edit `/etc/locale.gen`
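A sketch of that edit with `sed`: uncomment the wanted locales, then run `locale-gen`. `enable_locales` is a hypothetical helper; `en_US.UTF-8` and `zh_CN.UTF-8` are the usual entries for an English plus Chinese setup.

```shell
# enable_locales FILE LOCALE...: hypothetical helper.
# Turns "#zh_CN.UTF-8 UTF-8" into "zh_CN.UTF-8 UTF-8"; other lines are untouched.
enable_locales() {
  local file=$1 loc
  shift
  for loc in "$@"; do
    sed -i "s/^#\($loc UTF-8\)/\1/" "$file"
  done
}
```

As root: `enable_locales /etc/locale.gen en_US.UTF-8 zh_CN.UTF-8 && locale-gen`.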

zsh

File `~/.zshrc`

Network setup

Manage the network with systemd-networkd

Create `/etc/systemd/network/20-wired.network`. The following is a static IP example.

`[Match]`
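A sketch of a complete static-IP `.network` file, printed by a hypothetical helper. The interface, address and gateway are placeholders, and using the gateway as DNS server is an assumption (typical for a home router).

```shell
# networkd_static IFACE ADDRESS/PREFIX GATEWAY: hypothetical helper.
# [Match] selects the interface; [Network] sets a static address.
networkd_static() {
cat <<EOF
[Match]
Name=$1

[Network]
Address=$2
Gateway=$3
DNS=$3
EOF
}
```

As root: `networkd_static enp4s0 192.168.6.20/24 192.168.6.1 > /etc/systemd/network/20-wired.network`.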

Start systemd-networkd

`systemctl enable systemd-networkd`

Check DNS

`resolvectl status`

Set up SSH remote login

`pacman -S openssh`

Start sshd

`systemctl enable sshd.service`

Create a user and group

`groupadd -g 100 wii`

Grant a regular user superuser privileges

`pacman -S sudo`
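A sketch of the usual next step: put the user in the `wheel` group and allow that group to use sudo. It uses a drop-in file instead of editing `/etc/sudoers` directly, which assumes the default `/etc/sudoers` still includes `/etc/sudoers.d`; `sudoers_wheel` and the file name `10-wheel` are hypothetical.

```shell
# sudoers_wheel: hypothetical helper printing a sudoers rule that lets
# members of the wheel group run any command as any user.
sudoers_wheel() {
  printf '%s\n' '%wheel ALL=(ALL:ALL) ALL'
}
```

As root: `usermod -aG wheel wii`, then `sudoers_wheel > /etc/sudoers.d/10-wheel`, `chmod 0440 /etc/sudoers.d/10-wheel`, and `visudo -c` to check the syntax before logging out.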

Essentials

`pacman -S sudo git curl wget zip unzip base-devel`

Wake-on-LAN

`sudo pacman -S ethtool`

Or use a wol service.

Install

Or add a wol service manually

`sudo tee /etc/systemd/system/wol.service > /dev/null <<EOF`
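A sketch of what such a unit can contain. `ethtool -s IFACE wol g` enables wake on magic packet; on many systems the setting is lost after a reboot, so a oneshot unit reapplies it at boot. The helper name is hypothetical and `enp4s0` is a placeholder interface.

```shell
# wol_unit [IFACE]: hypothetical helper printing a wol.service unit.
wol_unit() {
cat <<EOF
[Unit]
Description=Enable Wake-on-LAN on ${1:-enp4s0}
After=network.target

[Service]
Type=oneshot
ExecStart=/usr/bin/ethtool -s ${1:-enp4s0} wol g

[Install]
WantedBy=multi-user.target
EOF
}
```

Then `wol_unit enp4s0 | sudo tee /etc/systemd/system/wol.service > /dev/null && sudo systemctl enable --now wol.service`; `ethtool enp4s0 | grep Wake-on` should show `Wake-on: g`.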

CentOS initial setup

Configuration

Disable SELinux
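A sketch of the persistent part of disabling SELinux: set `SELINUX=disabled` in `/etc/selinux/config`, which takes effect after a reboot. The helper name is hypothetical. For the running system, `sudo setenforce 0` switches to permissive mode immediately.

```shell
# disable_selinux_config [FILE]: hypothetical helper, FILE defaults to
# /etc/selinux/config. ^SELINUX= does not match the SELINUXTYPE= line.
disable_selinux_config() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "${1:-/etc/selinux/config}"
}
```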

Enable the EPEL repository

`sudo yum -y install epel-release`